Meta has made a major move in the AI arms race, launching a new initiative called Superintelligence Labs that is already making waves across the tech world.

What Is Superintelligence Labs?

Superintelligence Labs is Meta’s elite, secretive team tasked with building the next generation of AI: specifically, a multimodal system that can process and reason across text, images, voice, and video. The goal? Create a universal AI assistant that rivals anything currently available from OpenAI, Google DeepMind, or Anthropic.

Who’s Behind It?

Meta pulled out all the stops in recruiting this team. Leading the charge are:

- Alexandr Wang – Co-founder of Scale AI, known for his work in data labeling and synthetic data.

- Nat Friedman – Former GitHub CEO and a prominent investor in AI startups.

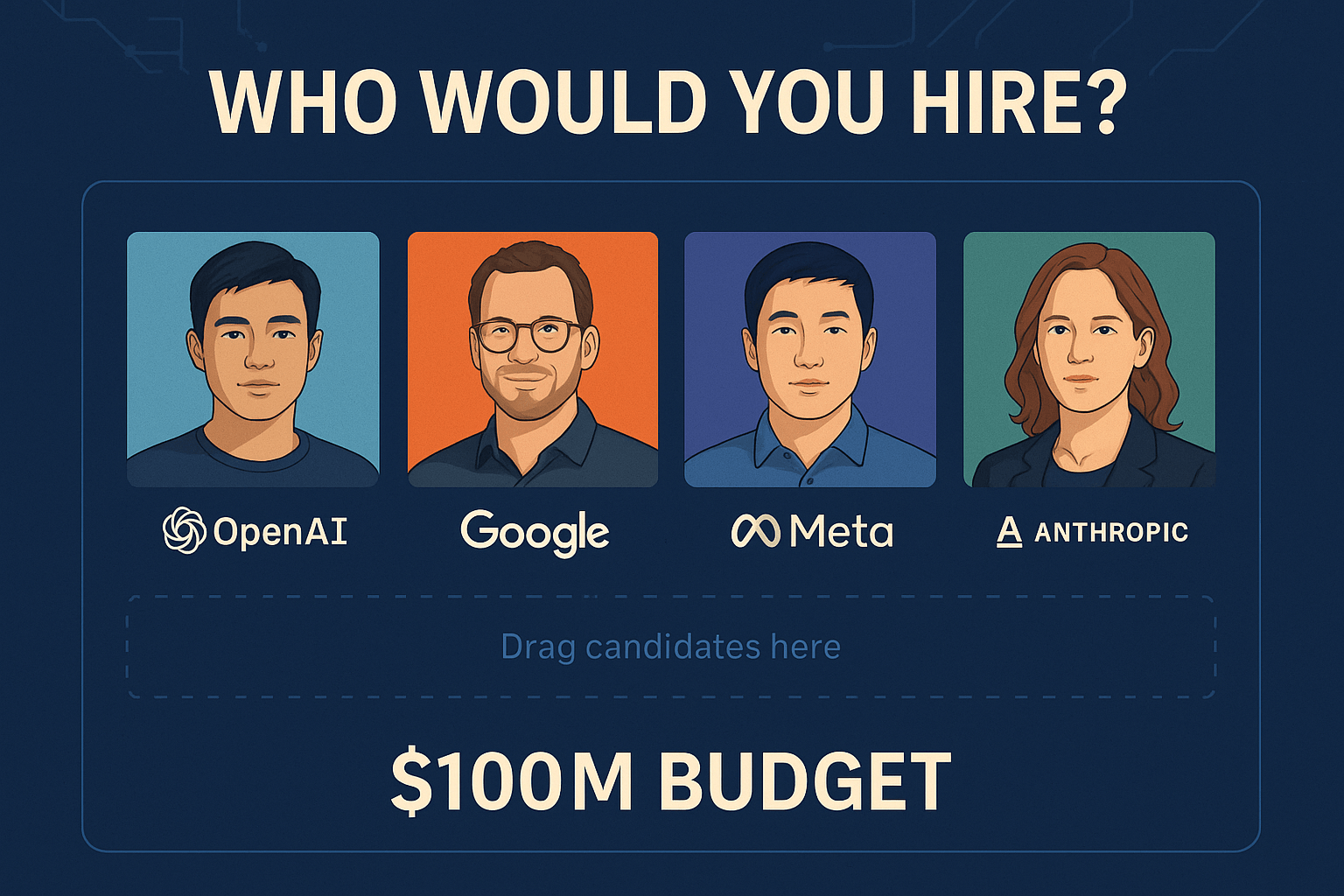

They’ve also brought in heavyweights from top AI labs:

- Former OpenAI scientists and engineers

- Google DeepMind alumni

- Experts in synthetic data, post-training tuning, and multimodal alignment

Some recruits are reportedly being offered signing bonuses of up to $100 million.

Why It Matters

- Raises the Stakes – Meta is signaling it wants to go toe-to-toe with OpenAI and Google—not just in research, but in product-ready superintelligence.

- Multimodal Mastery – The team is focused on creating AI that understands and interacts using multiple data types, a key leap from today’s mostly text-based systems.

- Talent Wars Heat Up – With nine-figure compensation packages and mission-driven recruitment, Meta is intensifying the global race for top AI minds.

- Full-Stack Ambition – Unlike some rivals, Meta controls the full stack—hardware (via custom chips), data (via its platforms), and research—giving it a potential edge.

The Bigger Picture

While Meta’s Llama models are already well-regarded in the open-source community, this new initiative represents a strategic pivot toward closed, productized, consumer-facing AI. It echoes OpenAI’s GPT, Google’s Gemini, and Anthropic’s Claude efforts—but with a much more aggressive push to own both the platform and the assistant layer.

This could reshape the future of AI not just as a tool, but as an ever-present interface for work, entertainment, and everyday life.

Key Takeaways:

- Meta’s “Superintelligence Labs” is its most ambitious AI move yet.

- The lab is focused on building a truly multimodal, universal AI assistant.

- Recruits include top talent from OpenAI and Google DeepMind.

- Signing bonuses reportedly reach up to $100 million.

- Meta aims to be a full-stack AI powerhouse, not just a research player.

Stay tuned—Meta’s AI revolution is just getting started.