A major new update from OpenAI has just landed, and it’s a game changer:

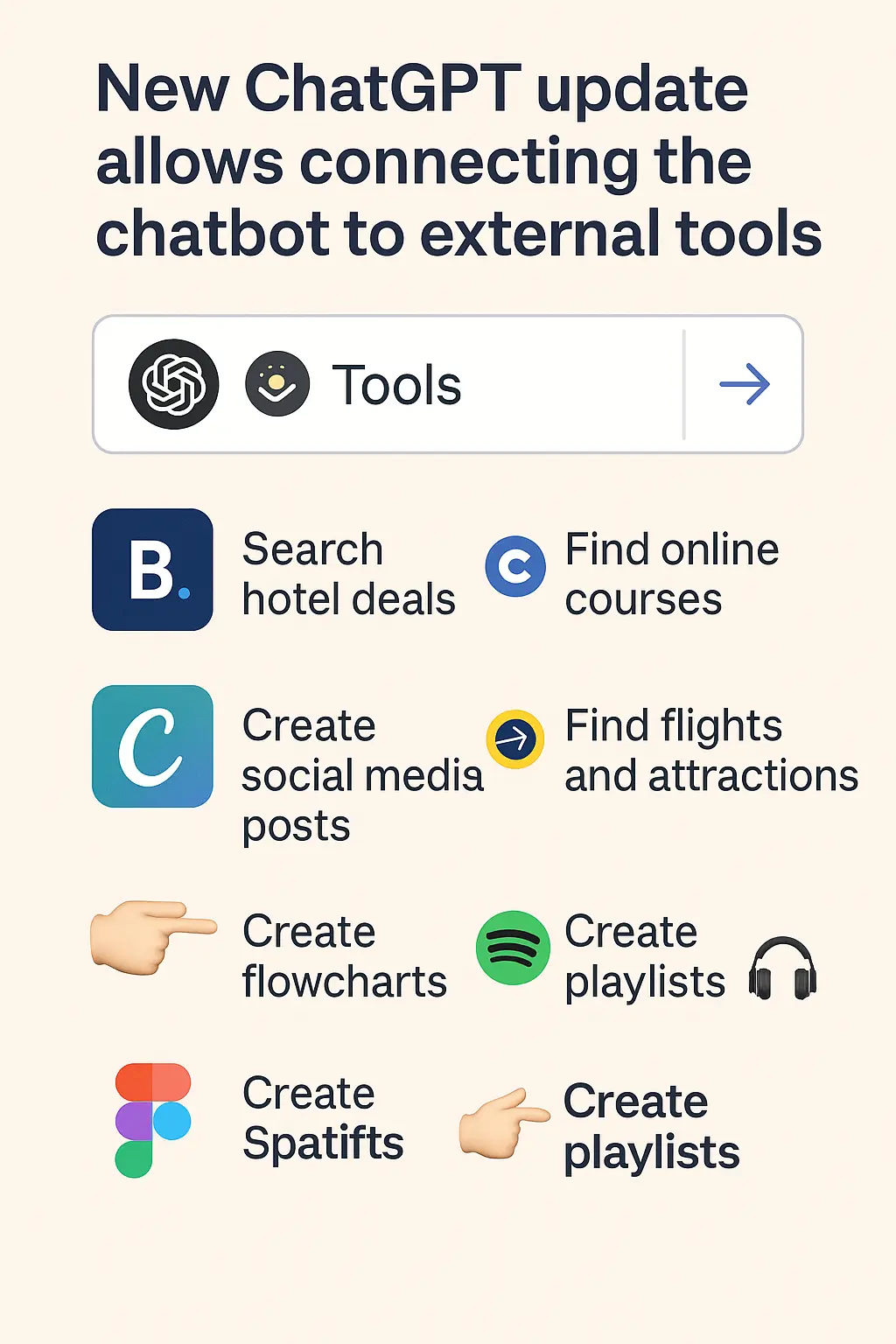

⬆️ You can now connect ChatGPT to external tools directly within the chat – just type “@” and select the tool you want to use.

What’s New?

Until now, ChatGPT mostly stayed within the boundaries of the conversation: it could search the web, summarize articles, and help you write, but it couldn’t take action in other apps.

That just changed.

With this update, ChatGPT can “talk” to external apps such as Spotify, Canva, and Expedia, all without leaving the chat window.

Here’s What You Can Do:

- 📍 Booking.com – Find hotel deals directly in the chat.

- 🎨 Canva – Design social media posts, carousels, and graphics without leaving ChatGPT.

- 📅 Coursera – Discover online courses on a wide range of subjects.

- ✈️ Expedia – Search for flights, hotels, and travel experiences.

- 🌀 Figma – Create flowcharts and designs directly in the chat.

- 🎵 Spotify – Connect your account and get personalized playlists in seconds.

Good to Know:

⚠️ This feature is currently only available on the desktop web version.

The future is here: ChatGPT is evolving from a smart assistant into a personal agent that doesn’t just respond – it acts.

Want to try it? Log in to ChatGPT on desktop and type “@”.