By AI Trend Scout | June 20, 2025

In a move that could reshape the relationship between technology and national defense, OpenAI has secured a contract worth up to $200 million with the U.S. Department of Defense (DoD), marking a significant departure from its prior stance against military applications.

A Strategic Shift

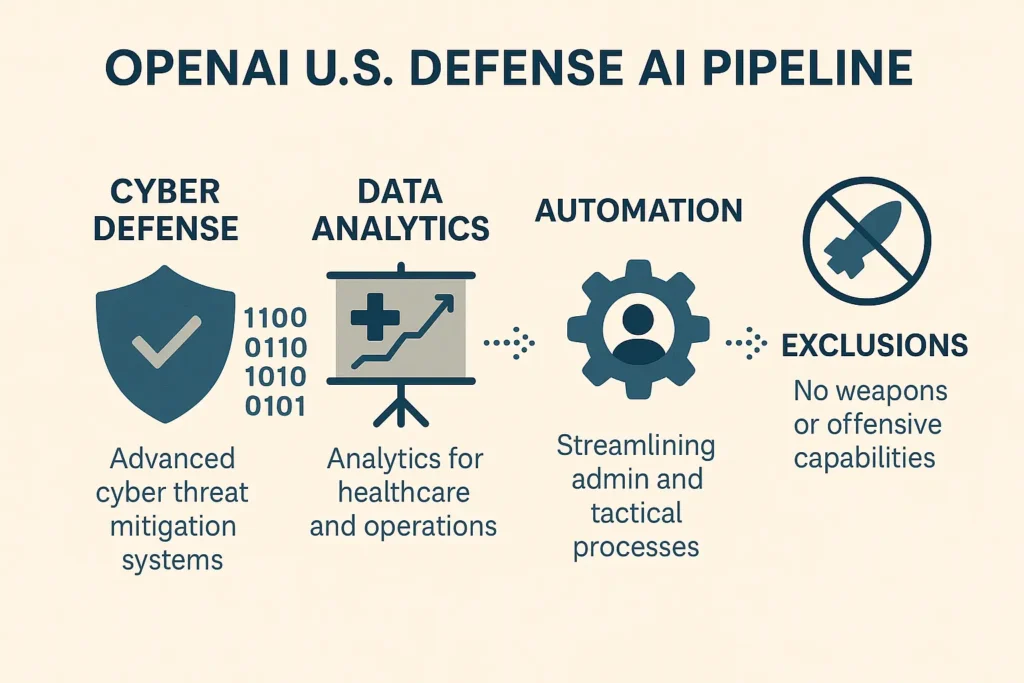

The contract encompasses a range of non-combat applications: advanced cyber defense tools, data analysis for healthcare and logistics, and intelligent automation to support administrative and battlefield readiness operations. OpenAI emphasized that none of the work involves weaponry or offensive AI systems.

This collaboration, made public on June 18, is already stirring conversation across Silicon Valley and Capitol Hill.

“This isn’t about building weapons,” said Mira Murati, CTO at OpenAI. “It’s about enhancing our nation’s defense infrastructure responsibly with state-of-the-art AI.”

OpenAI, which updated its usage policy in 2024 to allow select defense collaborations, is now joining tech giants like Microsoft and Google, which have been gradually expanding into this arena.

The Bigger Picture: AI Arms Race

This partnership reflects the U.S. government’s increasing urgency to stay ahead in what some are calling an “AI arms race” against rising global powers. By partnering with a top-tier research lab like OpenAI, the DoD signals a strategic intent to deploy safe, cutting-edge generative AI in defense-critical sectors.

“China is not pausing its AI efforts for ethical debates,” noted Jessica Reznick, a policy advisor at the Center for a Responsible Digital Future. “This contract shows the U.S. doesn’t intend to either—but it wants to lead with accountability.”

Ethics & Boundaries

OpenAI’s leadership was quick to reaffirm its red lines. A company spokesperson confirmed the partnership is bound by “strict ethical review processes,” and includes external oversight to ensure the AI systems are not used in offensive military contexts.

This effort mirrors ongoing discourse around “Responsible AI”—a growing field focused on applying transparent, secure, and fair AI principles to high-stakes sectors like defense, law enforcement, and healthcare.

Community Reaction

Within the AI research community, opinions are split. Some fear this sets a precedent for broader militarization of AI; others view it as a pragmatic step given geopolitical realities.

On X (formerly Twitter), AI researcher and ethicist Dr. Emily Yuen shared:

“I’m torn. This could accelerate safe, civilian-benefiting AI under military funding—but also risks normalizing AI militarization under vague ethical claims.”

What’s Next?

Expect ripple effects:

- More startups may seek defense contracts.

- Academic labs could face funding dilemmas over military ties.

- OpenAI’s competitors, like Anthropic and Google DeepMind, may soon unveil their own defense-focused partnerships.

Bottom Line

This $200 million contract could represent a new frontier—not just for OpenAI, but for the entire AI industry. It underscores how foundational AI is becoming in global security conversations, and how the lines between civilian innovation and military application are rapidly blurring.