Published: 29 July 2025

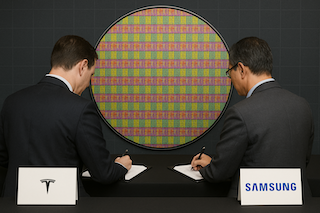

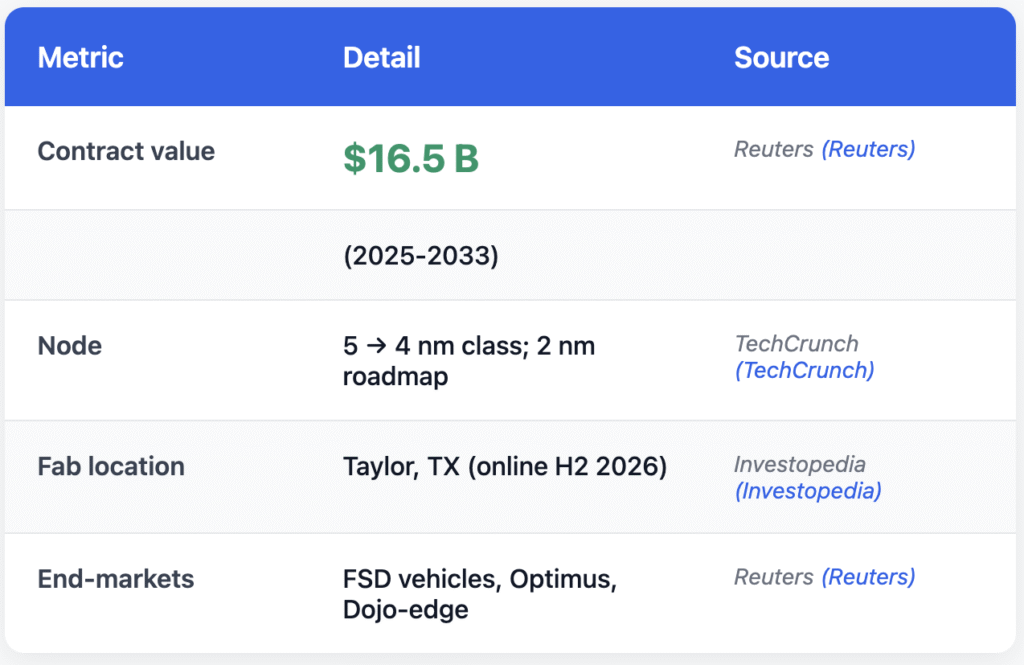

Tesla and Samsung have confirmed a $16.5 billion, decade-long pact for Samsung’s new Taylor, Texas megafab to manufacture Tesla’s AI6 system-on-chip—the silicon brain slated for next-gen self-driving cars, Optimus robots, and Dojo edge servers.

Why the deal is a watershed moment

- Performance leap: Early specs point to 2× TOPS-per-watt over the upcoming AI5, shrinking Tesla’s in-vehicle compute stack to a single board. (TechCrunch)

- Supply-chain resilience: U.S. production trims trans-Pacific risk and dovetails with CHIPS Act incentives. (Reuters)

- Samsung’s foundry comeback: A marquee client could help the Korean giant close its technology gap with TSMC. (The Guardian)

What Tesla gains

- Thermal headroom for single-board inference—critical for the 2027 robotaxi launch window.

- One-day shipping: 40 km highway hop from Taylor fab to Giga Austin.

- Co-development foothold: Samsung has agreed to let Tesla help maximize manufacturing efficiency, and Musk says he will personally “walk the line” to speed up progress. (Reuters)

What Samsung gains

- Flagship U.S. customer to validate its $37 billion Texas investment. (KED Global)

- CHIPS Act leverage: Tesla’s volume strengthens Samsung’s case for additional subsidies.

- Automotive reliability halo: a track record that could attract other potential clients (e.g., Mobileye, Nvidia Drive).

Policy & market lens

Washington is pushing “friend-shoring” to cut reliance on Asian fabs, and Tesla is now the first major EV maker to localize advanced AI silicon. Samsung shares jumped 6.8%, while Tesla closed up 4.2% on the news. (Reuters)

“Samsung likely sacrificed margin for mind-share—the reputational upside is enormous,” says Ryu Young-ho of NH Investment & Securities. (The Guardian)

Road-map to rollout

| Timing | Milestone | Impact |
|---|---|---|
| Q1 2026 | Taylor fab ramps | First AI6 wafers |
| H2 2026 | Model Y refresh ships with AI6 | Real-world validation |
| 2027 | Tesla robotaxi launch target | AI6 mandatory |
| 2028–33 | Node shrinks to 2 nm | Efficiency gains |

Next steps for readers

- Deep dive into Dojo architecture → see our [Dojo AI Explained] guide.

- Track U.S. fab subsidies → follow [CHIPS-Act Tracker].

- External reference: Reuters full story.

Bottom line

The Tesla AI6 chip deal marries hardware ambition with industrial policy. If Samsung hits yield targets, Tesla secures the silicon it needs for scalable autonomy, while the U.S. gains another advanced-node anchor—shrinking the geopolitical risk baked into every self-driving mile.